Dealing with duplicate content is definitely one of the toughest challenges for an SEO. Many content management systems handle content well, but they don’t always consider SEO when that content is spread across the site.

There are two types of duplicate content: onsite and offsite. Onsite duplication happens when the same content appears on multiple pages of your own website. Offsite duplication occurs when content from one website shows up on another site. While you might not be able to control offsite duplication, you can manage onsite duplication. Both types can cause problems.

Why is Duplicate Content an Issue?

Before diving into the issues with duplicate content, let’s first talk about why unique content is so important. Unique content sets you apart. It makes your site stand out because it’s one-of-a-kind.

Using the same text as everyone else won’t give you an edge over your competitors. If you have multiple pages with identical content, they won’t help you rank better. In fact, it can hurt your site’s performance. Unique content is what makes a real difference.

Duplicate content can seriously hurt the value of your material. Search engines want to show users fresh, unique content, not multiple pages saying the same thing. When you produce original content, you’re competing with your rivals rather than with yourself.

Here’s how it works: search engines crawl your site, collect content from each page, and store it in their index. If they find a lot of duplicated information, they might not bother indexing all your pages and could instead focus on unique pages from your competitors. So, unique content is key to standing out and getting noticed.

Dealing With Offsite Duplicate Content Problems

Offsite duplicate content usually happens for one of two reasons: either you copied content from someone else, or someone else copied your content. Either way, it can hurt your site’s ability to rank well in search engines. Even if it wasn’t intentional, having the same content on multiple sites can impact your search engine rankings.

Content Scrapers and Thieves

The worst offenders in content theft are those who steal content from the web and post it on their own sites. These sites often end up as a disjointed mess, thrown together just to attract visitors who click on ads scattered throughout the content. Search engines are constantly working to find and remove these low-quality, content-scraping sites.

But not all content theft is done through scraping. Sometimes, people just copy your content and pretend it’s their own. While these sites may not be as bad as scrapers, they can still be harmful. If their stolen content gets a lot of links and the sites are high-quality, it could even hurt your rankings.

While scrapers are usually easy to ignore, some serious cases of theft might need legal action or a DMCA takedown request.

Article Distribution

When you distribute content with the hope that other websites will pick it up and repost it, the goal is usually to get links back to your site. This helps drive traffic and improves your visibility. For example, I write a lot for our E-Marketing Performance blog, and sometimes my work gets republished elsewhere. This is intentional, and I weigh the pros and cons carefully.

Getting links back from other sites is valuable because it boosts my site’s authority and helps me reach a wider audience than my own blog alone. By managing how and where my content gets duplicated, I avoid the problems of mass off-site duplication that can hurt a site’s rankings.

However, there are drawbacks. Once my content is published on other sites, I might lose some of the traffic to my own site. Often, these other sites rank higher in search results because they have more authority.

Despite this, the benefits usually outweigh the drawbacks. But things could change in the future.

There’s also talk about how search engines determine which version of duplicated content is the original and give it more credit. I’ve asked search engine engineers if links to a copied version would count as links to the original if it’s clear which came first.

That would be great if it’s true. Even if the link value is shared equally, I’d be happy as long as search engines still prefer the original content over duplicates, whether the copies are intended to harm or not.

Generic Product Descriptions

Product descriptions often end up being duplicated across many websites. Think about it: when you look at sites selling Blu-Rays, CDs, DVDs, or books, you’ll notice that the product lists are pretty much the same everywhere. The descriptions you see on these sites probably come from the product’s creator, publisher, or manufacturer. Since all these products come from the same source, the descriptions often end up being almost identical.

Imagine the vast amount of duplicate text if you consider millions of products and hundreds of thousands of websites selling them. If every site used the same product descriptions, there’d be enough repeated text to circle the solar system several times!

So, how do search engines handle this when people search for products? They focus on original content. Even if you’re selling the same product as others, writing a unique and engaging description can boost your chances of ranking higher in search results.

But it’s not just about having original content. Search engines also look at the entire webpage, including the site’s authority and the quality of its backlinks. If two sites have the same content, the one with more traffic, better backlinks, and a stronger social media presence will usually rank higher.

While unique product descriptions can give you an edge, they won’t be enough if your site lacks authority compared to competitors. Original content is key, but it also takes time to build a solid online presence. So, while unique content helps, it’s part of a bigger strategy to climb out of the content duplication trap.

Dealing with Onsite Duplicate Content Problems

Duplicate content on your own website is the trickiest kind, but it’s also the one you have the most control over. Dealing with duplicate content on other sites can be challenging, but fixing it within your own site is something you can actively manage.

Usually, duplicate content on a website happens because of poor site structure or website programming. A bad layout can lead to various duplicate content issues that are often tough to spot and fix.

Some people argue that Google’s algorithms are smart enough to handle these issues, saying that Google will sort it out. While it’s true that Google can recognize and handle some duplicate content, there’s no guarantee it will catch everything or fix it as well as you could.

Just because Google is good at what it does doesn’t mean you should rely on it to solve all your problems. If you let Google do all the heavy lifting, you might end up in bigger trouble. It’s better to take charge and reduce the work Google has to do by addressing these issues yourself.

Here are some common problems with duplicate content on your site and how to fix them.

Also Read: How to Recover from Google Penalties

The Problem: Product Categorization Duplication

Many websites use content management systems (CMS) to organize products into categories, each with its own URL. The problem arises when the same product is listed in multiple categories. Each category gets its own URL, creating duplicate URLs for the same product.

For instance, some websites might end up with up to five different URLs for a single product. This duplication is a big issue for search engines. Instead of having a website with 5,000 unique products, it ends up looking like it has 50,000 products. But upon closer inspection, search engines see that most of these pages are duplicates.

This duplication can cause search engines to leave your site during indexing. With so many duplicate pages, search engines are forced to focus their attention elsewhere, which can hurt your rankings.

I remember seeing this problem on The Home Depot’s website a few years ago. To find a specific product, I had to navigate through two separate paths, each leading to a different URL for the same product. Even though the content was identical on both pages, except for the breadcrumb trail, this kind of setup creates redundant content.

If ten people link to each of these duplicate pages, while a competitor gets ten links to a single URL for the same product, the competitor’s page will likely rank higher in search results.

The Solution: Master Categorization

To tackle the issue of duplicate URLs for products in multiple categories, you could make sure that each product only appears in one category. But this can be tricky for your customers who might have trouble finding the product if they’re looking through different categories.

Here are two ways to avoid duplicate content while still using multiple categories. One method is to manually set up unique URL paths for each product. This can be quite time-consuming and may make your directory structure a bit messy. Another approach is to place all products into a single directory, regardless of their category. However, this method can weaken the design of your site and make it harder to reinforce your categorization.

The simplest solution is to assign each product a “master category.” This category will determine the product’s URL. You can then list the product in other categories to give visitors multiple ways to find it. No matter which path they take, the product’s URL will stay the same once they reach it.

To tackle this issue, many programmers block search engines from indexing all URLs except the main one for each product. While this stops duplicate pages from showing up in search results, it doesn’t solve the problem of link splitting.

To tackle this issue, many programmers block search engines from indexing all URLs except the main one for each product. While this stops duplicate pages from showing up in search results, it doesn’t solve the problem of link splitting.

So, any links that point to the blocked URLs don’t pass along their link juice, which means they don’t help improve the product’s ranking in search results.

Band-Aid Solution: Canonical Tags

This fix doesn’t work with every content management system. If your CMS doesn’t support it, you might need to either use a temporary fix or switch to a more search-friendly CMS.

One option is to use canonical tags. These tags tell search engines which URL you want to be considered the “main” version. For example, if you have multiple URLs for the same product, you add a canonical tag to each duplicate URL, pointing to the main one:

<link rel="canonical" href="http://www.thehomedepot.com/building-materials/landscaping/books/123book" />

When you use this tag, search engines should treat the main URL as the primary version and give it the link value, helping to keep the duplicates out of search results.But here’s the thing: the canonical tag is more of a suggestion to search engines. They’ll decide how to use it, which means not all link juice might go to the main URL and some duplicate pages might still show up in the index.

The Problem: Product Summary Duplication

A common example of duplicate content is having brief summaries of product descriptions on various category pages.

Let’s say you’re looking for a Burton snowboard. When you click on the Burton link from the main menu, you see a full catalog of Burton products, with snippets of product descriptions and different filterable subcategories. You select the “snowboards” subcategory, which shows a list of Burton snowboards, each with a brief description.

However, if you go back to the “all snowboards” section, you’ll see snowboards from all brands, including those same Burton snowboards with the same descriptions you’ve already seen!

Category pages are great for ranking in search results (like searches for “Burton snowboards”). But many of these product category pages just list products with short descriptions that repeat across various category pages. This repetition makes these pages less effective and less valuable for search engines.

The Solution: Create Unique Content for All Pages

The goal is for each product category page to stand out and provide real value to visitors. The best way to do this is by writing a unique paragraph or more for each product page. Use this opportunity to highlight what makes Burton snowboards special and share details that might help visitors make a buying decision.

Even if you remove all the products from the category pages, these pages can still be valuable for search engines. As long as the content is useful and original, the page will remain important for indexing, despite any repeated content.

The Problem: Secure/Non-Secure URL Duplication

Duplicate content can be a problem on e-commerce sites that use secure checkout. It’s similar to the multiple URL issue, but here it involves having both a secure and non-secure version of the same URL.

For example:

- http://www.site.com/category/product1/

- https://www.site.com/category/product1/

The key difference is the “s” in “https,” which indicates a secure URL. While product pages don’t generally need to be secure, pages that handle sensitive data do.

This problem often happens when users move from an insecure part of the website to a secure shopping cart. If they continue browsing or make more purchases before checking out, links might use “https” instead of “http,” creating duplicate content issues.

The Solution: Use Absolute Links

Linking products in a customer’s cart back to their product pages is a smart move. But here’s a common issue: many web developers use relative links instead of absolute links for internal URLs.

If you’re not familiar with the terms, a relative link includes just the part of the URL after the domain name (like /about-us/pit-crew/stoney-degeyter/). An absolute link, on the other hand, includes the full URL (like https://www.polepositionmarketing.com/about-us/pit-crew/stoney-degeyter/).

When a customer is on a secure part of the site (using https), relative links will automatically switch to https based on their current location. This can create issues if you want to direct customers to specific product pages, as it might force them to switch from https to http and back.

You might wonder why anyone would use relative links at all. Before modern content management systems, websites were manually coded and managed. During routine updates or structural changes, moving files around was easier with relative links because they updated automatically. Absolute links required manual updates, which could lead to broken links.

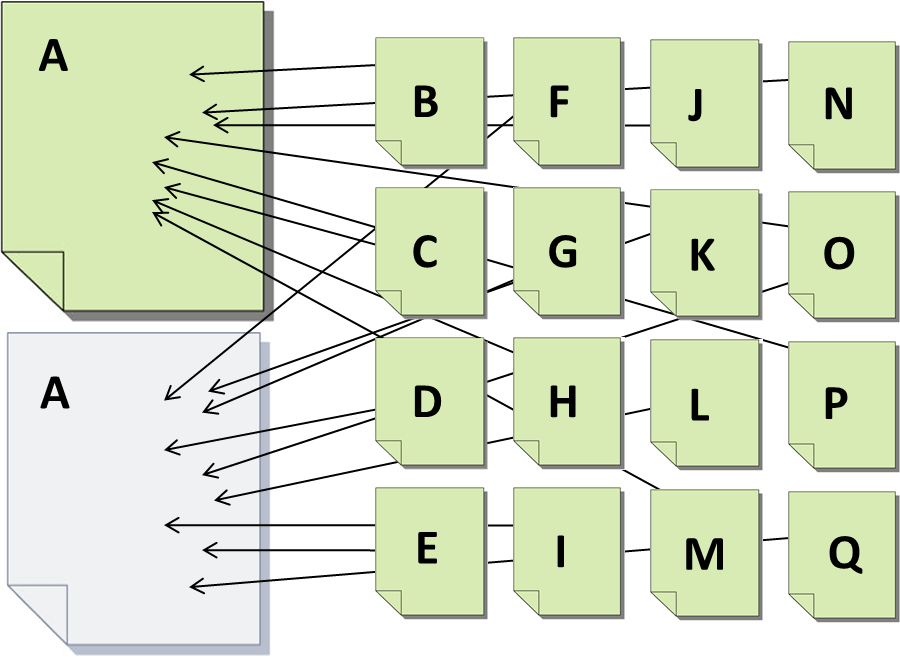

While relative links were once the standard, I recommend using absolute links for product links in shopping carts and for site navigation. This ensures that your links work correctly and consistently, which is crucial for both customers and search engines. The link structure to and from your shopping cart is illustrated in the graphic below.

Ideally, search engines should never access the shopping cart area of your site. You should block these URLs to keep them out. But just blocking these pages isn’t enough. If a visitor moves from a restricted page to an unblocked, secure product page, Google might still pick up that page.

To prevent this, make sure there are no absolute links pointing from secure pages back to your product pages. This way, search engines won’t be able to explore or index these pages.

The Problem: Session ID Duplication

Session IDs can cause some serious problems with duplicate content. They’re designed to track users and keep their shopping carts personalized. Every time someone visits your site, they get a unique ID number added to the URL to keep track of their activity.

Here’s how it works:

- Normal URL:

www.site.com/product - Visitor 1:

www.site.com/product?id=1234567890 - Visitor 2:

www.site.com/product?id=1234567891 - Visitor 3:

www.site.com/product?id=1234567892

Each visitor gets a different session ID, so every page they visit has this ID attached to it. If your site has 50 pages, each with a unique session ID for every visitor, you end up with 50 different URLs per page. If you get 2500 visitors a day, that’s nearly a million unique URLs in a year, all for just 50 pages!

Is that something a search engine would want to index?

The Solution: Don’t Use Session IDs

I’m not a coder, so my technical knowledge has its limits, but here’s what I know: session IDs can be a real pain when it comes to duplicate content. They’re like a sticky mess that just won’t go away. There are better ways to track users without creating all that duplicate data. For example, cookies can track users across different sessions and browsers without causing the same problems.

You and your programmer can figure out the best tracking method for your system. Just know that I’ve flagged session IDs as a no-go.

The Problem: Redundant URL Duplication

The way that pages are accessed in the browser is one of the most fundamental site architecture issues. Although most pages can only be viewed via their main URL, there are certain exceptions, such as when the page is the initial page of a virtual or non-virtual subdirectory.

The picture below serves as an example of this. If you leave any of these URLs unchecked, they all point to the same page with the same information.

This applies to any page (such as www.site.com/page et al.) at the top of a directory structure. That is one page with four different URLs, which causes the website to have duplicate content and divides your link juice.

The Solution: Server Side Redirects and Internal Link Consistency

You can tackle duplicate content in several ways, and I recommend using all of them. Each method has its strengths, but none are perfect on their own. By combining them, you’ll create a solid solution to prevent duplicate content issues!

Server-Side Redirects

One way to fix this problem is by using server-side redirects. For Apache servers, you can use your .htaccess file to redirect non-www URLs to www URLs (or the other way around). I won’t dive deep into the technical details here, but you can check out the link for more info. If you’re using a different server, work with your web host or programmer to set up a similar solution.

This method works whether you prefer having www in your URL or not—just pick your preference and make sure the other option redirects to it.

Internal Link Consistency

Once you’ve decided on your URL format, make sure all your internal links are consistent. If you choose to use www, ensure all your internal links include it. While server-side redirects will handle incorrect links, inconsistencies can lead to duplicate pages being indexed if the redirect fails. I’ve seen redirects break when servers are updated, and the issue can go unnoticed for months, allowing duplicate pages to slip into search results.

Avoid Linking to Page File Names

When linking to a page at the top of a directory or subdirectory, avoid using the file name like /index.html or .php. Instead, link to the root directory. Server-side redirection typically works for the homepage, but not always for subdirectories. Linking directly to the top-level pages’ root avoids potential duplicate pages in search results.

So link to:

www.site.com/www.site.com/subdirectory/

Instead of:

www.site.com/index.htmlwww.site.com/subfolder/index.html

It might seem like overkill to use every one of these methods, but they’re mostly simple and worth the effort. Taking the time to implement them ensures that duplicate content issues will be effectively addressed.

The Problem: Historical File Duplication

Even though it might not seem like a typical duplicate content issue, it definitely can be one. Websites often go through multiple design updates, revisions, and complete overhauls over time. As things get rearranged, duplicated, moved, and tested, it’s easy to unintentionally create duplicate content. I’ve seen developers completely change a website’s directory structure and then upload it without deleting or redirecting the old content.

The problem gets worse when internal links aren’t updated to point to the new URLs. I once spent over five hours fixing broken links that were still pointing to old files after developers launched the “finished” new site!

Search engines will keep indexing these outdated pages as long as they’re on the server and still linked to. This results in the old and new pages competing for search engine rankings.

The Solution: Delete Files and Fix Broken Links

You won’t get much out of a broken link check if you haven’t removed outdated files from your server first. So let’s start there. Make sure you back up your website before deleting any pages, just in case you accidentally remove something important. After cleaning up, use a tool like Xenu Link Sleuth to check for broken links.

The tool will give you a report showing which links are broken and where they should point to now. Use this report to fix the links and then run the check again. You might need to do this several times—sometimes up to 20—to make sure all the issues are fixed. It’s a good idea to run these checks regularly to catch any new problems.

Not all duplicate content will ruin your on-site SEO, but it can definitely keep your website from performing at its best. Your biggest issue with duplicate content is likely from your competitors’ sites, not your own. Try to remove any duplicate content from your site and replace it with original, valuable content. This will give you an edge over competitors who don’t manage their duplicate content as well.

Pingback: Why Site Load Speed Matters for SEO